How to Scrape Google Images Without Getting Blocked

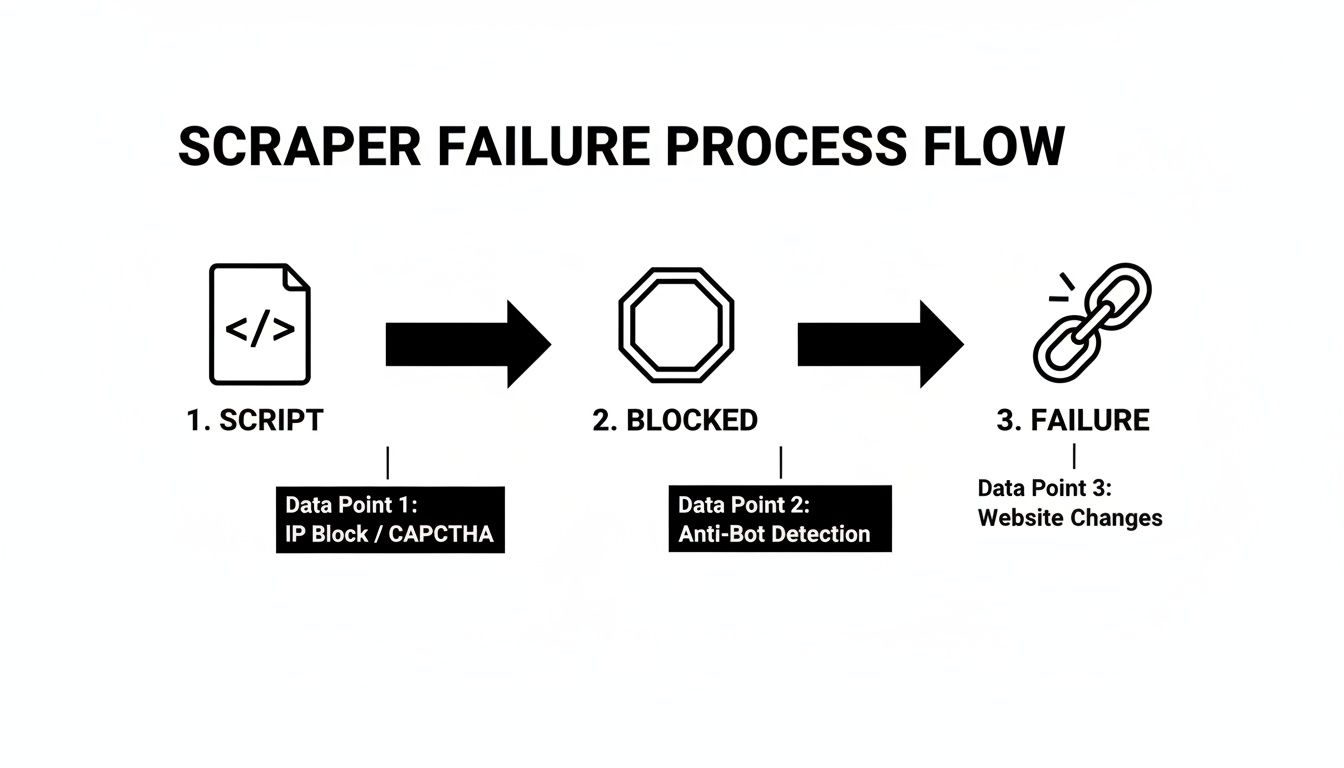

If you’re reading this, your scraper is probably dead. Your scripts are timing out, your IPs are banned on sight, and the image data you need is completely out of reach. Most guides on how to scrape Google Images are outdated theory, showing you a simple script that ignores the reality of Google's anti-bot systems.

Gunnar

Last updated: Feb 9, 2026

Tutorials

This guide is different. We’re not SEO writers; we’re the engineers running the proxy networks that handle billions of requests. We see what works and what fails at scale. Forget the simple Python examples. We’re going to explain the operational mechanics of why you're getting blocked and what infrastructure is required to succeed.

You'll learn:

Why parsing HTML is a dead-end strategy and how to target Google's internal data APIs instead.

The connection-level fingerprints (TLS/JA3) and browser signals that expose your scraper before it even sends a request.

Which proxy types are non-negotiable and why bad rotation logic is worse than no rotation at all.

This isn't theory. This is a production playbook.

What is Google Image Scraping?

Google Image scraping is the automated process of extracting image URLs, metadata, and the images themselves from Google's search results. For data engineering teams, this isn't about downloading a few pictures; it's about building a scalable data pipeline to fuel AI model training, e-commerce intelligence, or SEO analytics. The core challenge isn't writing the script—it's designing the infrastructure to bypass Google’s sophisticated anti-automation defenses.

How It Actually Works: Operator-Level Mechanics

Success requires understanding that Google Images isn't a static webpage. It’s a dynamic, JavaScript-driven application that lazy-loads results as a user scrolls, fetching data from internal API endpoints. Your scraper's primary job is to mimic this browser behavior, not just request a URL.

Requesting a URL and parsing whatever static HTML comes back is the failure loop most teams get stuck in.

Here are the mechanics you must master:

Reverse-Engineering the Request Flow: Open your browser's developer tools (F12 > Network tab) and watch the XHR requests as you scroll. These requests hit internal Google endpoints and return clean JSON payloads containing image URLs, alt text, and source links. Your scraper should target these endpoints directly, not parse messy HTML.

Headless Browsers vs. Direct API Calls: A headless browser (controlled via Puppeteer or Playwright) can execute the necessary JavaScript to trigger these API calls naturally. While resource-intensive, it’s the most reliable method. Direct HTTP requests to the JSON endpoints are faster but require meticulous management of headers, cookies, and session tokens, which change frequently.

The Sticky Session Pitfall: A common mistake is rotating your proxy IP on every single request. This is an unnatural pattern that destroys the session state Google uses to serve paginated results. Instead, use "sticky sessions" to hold the same IP for several minutes, mimicking a real user browsing session before rotating. This significantly reduces CAPTCHA frequency. Read our guide on integrating proxies with Puppeteer for implementation details.

Concurrency and Rate Limiting: You can’t brute-force Google. Sending too many concurrent requests from a single IP, even a residential one, is a massive red flag. Your architecture must distribute requests across a large pool of IPs and enforce sane, per-IP rate limits to avoid triggering velocity-based blocks.
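The sticky-session and per-IP rate-limit rules above can be sketched as a small pool object. This is a minimal illustration, not a production scheduler: the class name `StickyProxyPool`, the 300-second sticky window, and the 2-second minimum gap per proxy are all assumptions chosen for the example, and the proxies are opaque strings rather than live connections.

```python
import time


class StickyProxyPool:
    """Holds one proxy per logical session for a sticky window and
    spaces out requests that go through the same proxy."""

    def __init__(self, proxies, sticky_seconds=300.0, min_interval=2.0,
                 clock=time.monotonic):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._proxies = list(proxies)
        self._sticky_seconds = sticky_seconds  # assumed session length
        self._min_interval = min_interval      # assumed per-proxy spacing
        self._clock = clock
        self._next_index = 0
        self._sessions = {}   # session_id -> (proxy, started_at)
        self._last_used = {}  # proxy -> time its latest request is scheduled

    def acquire(self, session_id):
        """Return (proxy, wait_seconds) for the next request in a session.

        The caller is expected to sleep for wait_seconds before sending.
        """
        now = self._clock()
        current = self._sessions.get(session_id)
        if current is None or now - current[1] >= self._sticky_seconds:
            # New session, or the sticky window expired: move to the next proxy.
            proxy = self._proxies[self._next_index % len(self._proxies)]
            self._next_index += 1
            self._sessions[session_id] = (proxy, now)
        else:
            proxy = current[0]

        earliest = self._last_used.get(proxy, float("-inf")) + self._min_interval
        wait = max(0.0, earliest - now)
        self._last_used[proxy] = now + wait
        return proxy, wait
```

A worker would call `pool.acquire("worker-1")` before each request, sleep for the returned wait, and route the request through the returned proxy. Because the rate limit is tracked per proxy rather than per session, two sessions that land on the same IP still share one budget.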

Proxy Types & Tradeoffs for Google Scraping

Choosing the wrong proxy type is the most common point of failure. Datacenter proxies are useless against Google; their commercial ASN ranges are flagged on sight. Your decision should be driven by the specific demands of your project.

Here's the breakdown from an operator's perspective.

| Proxy Type | When It Works | When It Fails | Cost vs. Success Tradeoff |
|---|---|---|---|
| Residential | The baseline for any large-scale operation. The high IP diversity and trust signals from real consumer ISPs are essential for blending in. | When rotation logic is poor (e.g., per-request rotation), the IP pool is tainted, or cookie management is sloppy. | Higher cost (per GB) but delivers the highest success rate. This is a non-negotiable requirement for scraping at scale. |
| ISP Proxies | When you need a stable, high-trust IP for longer sessions. They offer the speed of datacenter with the reputation of a consumer ISP. | When you need massive IP diversity. A flagged IP can halt a process until it's manually replaced, as the pool is smaller. | Moderate cost with excellent speed. A strong choice for targeted, consistent scraping tasks. |
| Datacenter | Almost never. The only potential use is scraping from another cloud provider, and even then, success is highly unlikely. | Immediately. Google's anti-bot systems identify and block IPs from commercial ASNs instantly. | Low cost, but the 0% success rate makes them a complete waste of resources. Our guide on datacenter vs. residential proxies covers this in depth. |

Investing in a quality residential or ISP proxy network isn't a luxury; it's the only way to build a reliable and scalable scraping operation.

Why You’re Still Getting Blocked

If you're using residential proxies with a headless browser and still hitting CAPTCHAs, the problem is deeper than your IP address. Google’s defenses analyze your entire connection fingerprint to detect automation. Your IP is just one signal among hundreds.

Here are the real reasons your scraper is getting flagged:

Browser Fingerprinting: Headless browsers can be detected. Google analyzes signals like WebGL rendering parameters, installed fonts, and Canvas fingerprinting to build a unique signature of your client. A scraper running on a Linux server has a fundamentally different fingerprint than a consumer on a Windows machine, and this mismatch exposes you.

TLS/JA3 Fingerprints: You are fingerprinted during the initial TLS handshake, before any HTTP request is sent. The TLS version, cipher suites, and extensions your client offers combine into a JA3 fingerprint. Python's `requests` library has a different JA3 signature than a Chrome browser. Sending a Chrome `User-Agent` header with a Python TLS fingerprint is an immediate giveaway.

Header Entropy & Client Hints: The order and casing of your HTTP headers matter. Real browsers send headers in a consistent order; many automation tools do not. Furthermore, modern Chromium-based browsers use Client Hints headers to proactively send device information (such as browser brand, platform, and whether the device is mobile). Inconsistent or missing client hints are a clear sign of a non-standard client.

ASN Reputation: Not all residential proxy pools are clean. Some providers mix in low-quality IPs from ASNs known for abuse. If your requests come from a "bad neighborhood," you inherit that poor reputation, and your success rate will plummet regardless of your other evasion techniques.

Bad Rotation Logic: As mentioned, rotating IPs too frequently is an unnatural pattern. So is keeping one for too long. Effective scraping requires intelligent session management that mimics human behavior: browsing for a few minutes, then getting a new IP. Our engineer's guide to proxy rotators dives into the operational logic required.
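One cheap pre-flight check for the header mismatches described above is to lint your own outgoing headers before they ever leave the machine. The sketch below is an assumption-laden illustration: the function names are hypothetical, it only compares the `User-Agent` against the `Sec-CH-UA`, `Sec-CH-UA-Platform`, and `Sec-CH-UA-Mobile` hints, and it says nothing about TLS or browser-level fingerprints.

```python
def platform_from_user_agent(user_agent):
    """Map a User-Agent string to the platform name used in Sec-CH-UA-Platform."""
    # Android must be checked before Linux: Android user agents also contain "Linux".
    markers = (
        ("Android", "Android"),
        ("Windows NT", "Windows"),
        ("Macintosh", "macOS"),
        ("Linux", "Linux"),
    )
    for marker, platform in markers:
        if marker in user_agent:
            return platform
    return None


def find_header_mismatches(headers):
    """Return a list of inconsistencies between User-Agent and Client Hints."""
    lowered = {name.lower(): value for name, value in headers.items()}
    user_agent = lowered.get("user-agent", "")
    problems = []

    # A client claiming to be Chrome normally sends Sec-CH-UA over HTTPS.
    if "Chrome/" in user_agent and "sec-ch-ua" not in lowered:
        problems.append("Chrome User-Agent sent without a Sec-CH-UA header")

    # The platform hint is a quoted string such as "Windows".
    hinted_platform = lowered.get("sec-ch-ua-platform", "").strip('"')
    expected_platform = platform_from_user_agent(user_agent)
    if hinted_platform and expected_platform and hinted_platform != expected_platform:
        problems.append(
            f"Sec-CH-UA-Platform says {hinted_platform!r} "
            f"but User-Agent implies {expected_platform!r}"
        )

    # The mobile hint is "?1" for mobile and "?0" otherwise.
    hinted_mobile = lowered.get("sec-ch-ua-mobile")
    if hinted_mobile is not None and (hinted_mobile == "?1") != ("Mobile" in user_agent):
        problems.append("Sec-CH-UA-Mobile disagrees with the User-Agent")

    return problems
```

An empty list means the header set is internally consistent, not that it will pass. It catches the common self-inflicted error of pasting a Windows Chrome `User-Agent` onto a client that reports a Linux platform.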

Real-World Use Cases (With Constraints)

The right proxy strategy depends entirely on the operational constraints of your use case.

AI and Machine Learning Model Training

Why Proxies Are Required: Training a computer vision model requires millions of diverse images. This scale is impossible without a massive, distributed scraping architecture to avoid rate limits and IP bans. The sheer volume of data is staggering; Google handles 8.5 billion searches daily, and a significant portion involves images. This visual data is critical, with top-ranking images known to drive 40-60% more engagement. You can read more about the impact of image scraping on business intelligence here.

What Proxy Type Actually Works: A large, rotating pool of residential proxies is the only viable option. Concurrency is key, so you need a provider without restrictive thread limits.

What Fails at Scale: Using a small block of IPs or datacenter proxies will result in a near-instant ban. Teams also fail to account for the post-scraping data pipeline needed to process, validate, and store millions of image files and their associated metadata.
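The validation step of that post-scraping pipeline is easy to underestimate, because a blocked request often returns an HTML error page saved under an image filename. A minimal sketch of one way to handle it, assuming downloads arrive as `(source_url, bytes)` pairs: check the file's magic bytes, then drop exact duplicates by SHA-256. The function names and record layout are illustrative, not a prescribed schema.

```python
import hashlib

# Leading byte signatures for common image formats.
_MAGIC_PREFIXES = (
    (b"\x89PNG\r\n\x1a\n", "png"),
    (b"\xff\xd8\xff", "jpeg"),
    (b"GIF87a", "gif"),
    (b"GIF89a", "gif"),
)


def sniff_image_format(data):
    """Return the image format implied by the leading bytes, or None."""
    for prefix, name in _MAGIC_PREFIXES:
        if data.startswith(prefix):
            return name
    # WebP is a RIFF container: "RIFF", a 4-byte size, then "WEBP".
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "webp"
    return None


def dedupe_valid_images(records):
    """Keep the first copy of each valid image from (source_url, bytes) pairs."""
    seen_digests = set()
    kept = []
    for source_url, data in records:
        image_format = sniff_image_format(data)
        if image_format is None:
            continue  # not an image: likely a block page or truncated download
        digest = hashlib.sha256(data).hexdigest()
        if digest in seen_digests:
            continue  # byte-identical duplicate of an image already kept
        seen_digests.add(digest)
        kept.append({
            "source_url": source_url,
            "format": image_format,
            "sha256": digest,
            "size_bytes": len(data),
        })
    return kept
```

Hashing only catches byte-identical copies. Resized or re-encoded duplicates need a perceptual hash, which is outside the scope of this sketch.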

E-commerce Intelligence and Trend Analysis

Why Proxies Are Required: To monitor competitor product imagery and visual trends in different markets, you need to see search results as a local user would.

What Proxy Type Actually Works: Geo-targeted residential proxies are mandatory. If you're analyzing the German market, your requests must originate from German IPs. Sticky sessions are also critical for mimicking a user browsing multiple product pages.

What Fails at Scale: Using a generic proxy pool without country or city-level targeting provides useless, non-localized data. Also, only scraping organic results while ignoring the richer data in Google Shopping image results is a common oversight.
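Geo-targeting and sticky sessions are usually requested through the proxy credentials rather than through separate API calls. The exact syntax differs between providers, so treat the sketch below as hypothetical: the `-country-<cc>-session-<id>` username convention, the function name, and the host `proxy.example.com` are placeholders to be replaced with whatever your provider documents.

```python
from urllib.parse import quote


def build_proxy_url(user, password, host, port, country, session_id):
    """Build a proxy URL that pins a country and a sticky session.

    HYPOTHETICAL convention: targeting options are appended to the username
    as "<user>-country-<cc>-session-<id>". Check your provider's docs.
    """
    if len(country) != 2 or not country.isalpha():
        raise ValueError("country must be a two-letter ISO code, e.g. 'DE'")
    username = f"{user}-country-{country.lower()}-session-{session_id}"
    # Percent-encode credentials so characters like "@" or ":" cannot
    # break the URL structure.
    return (
        f"http://{quote(username, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}"
    )


# Example: a German exit IP held under one session label.
german_proxy = build_proxy_url(
    "acct", "p@ss", "proxy.example.com", 8000, "DE", "shop-run-1"
)
```

Reusing the same `session_id` is what keeps the IP sticky under this kind of scheme. Changing it is how you ask for a new IP in the same country.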

SEO and Ad Verification

Why Proxies Are Required: SEO agencies need a neutral, non-personalized view of image SERPs to accurately track rankings and verify ad placements for clients.

What Proxy Type Actually Works: A combination of clean ISP proxies for their speed and stability, supplemented by residential proxies for tasks requiring higher anonymity.

What Fails at Scale: Relying on cheap, shared proxies that are already on blacklists. These serve polluted data or constant CAPTCHAs, leading to flawed client strategies. Teams also underestimate the impact of cookies and session contamination, which leads to personalized results tainting their data. Our overview of web scraping proxies details these nuances further.

How to Choose the Right Setup

A functional script isn't a production system. To reliably scrape Google Images at scale, you must architect a resilient pipeline that treats failure as a predictable event, not an exception.

Here are the decision rules for building your infrastructure:

Budget vs. Reliability: If your project cannot tolerate failure, invest in a premium residential proxy pool. The higher cost is offset by higher success rates and reduced engineering time spent fighting blocks. If you need speed and stability for longer sessions, high-quality ISP proxies offer a better balance. Don't even consider datacenter proxies.

Common Buying Mistakes: The biggest mistake is buying based on the lowest price per gigabyte. Look for providers with clean, ethically sourced IP pools, flexible rotation options (sticky sessions), and granular geo-targeting. Another error is failing to test a provider's pool against your specific target.

When NOT to Use Rotating Proxies: If your task involves interacting with an account-based service where session consistency is paramount, aggressive rotation will get you locked out. For these cases, a single, static residential or ISP proxy is the correct tool.

Building a durable data asset requires moving beyond simple scripts. If you’re serious, learning how to build a data pipeline is the critical next step.
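Treating failure as a predictable event mostly means classifying each outcome and deciding up front what gets retried. The following is a rough sketch under stated assumptions: the outcome labels (`"ok"`, `"captcha"`, `"timeout"`, `"rate_limited"`), the function name, and the backoff parameters are invented for illustration, and `task` stands in for whatever function performs one fetch.

```python
import random
import time

# Assumed outcome labels worth retrying; anything else fails fast.
RETRYABLE_OUTCOMES = {"timeout", "captcha", "rate_limited"}


def run_with_retries(task, attempts=4, base=1.0, cap=60.0,
                     sleep=time.sleep, rng=random.random):
    """Run task() until it succeeds or the attempt budget is spent.

    task() must return (outcome, payload). Retryable outcomes are retried
    after an exponential backoff with full jitter; other failures stop
    immediately. Returns (payload_or_None, list_of_outcomes).
    """
    history = []
    for attempt in range(attempts):
        outcome, payload = task()
        history.append(outcome)
        if outcome == "ok":
            return payload, history
        if outcome not in RETRYABLE_OUTCOMES or attempt == attempts - 1:
            break
        # Full jitter: a random delay between 0 and the capped exponential step.
        sleep(rng() * min(cap, base * 2 ** attempt))
    return None, history
```

The returned history is the useful part for operations. Counting `captcha` versus `timeout` outcomes per proxy or per provider tells you whether the problem is your fingerprint, your rate, or the pool itself.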

FAQ

Here are the no-nonsense answers to the questions data engineers ask most often.

Is it legal to scrape Google Images?

Scraping publicly available data is generally permissible, but the legalities are complex and jurisdiction-dependent. The primary legal risks arise from scraping copyrighted material (the images themselves), personal data, or content behind a login. The responsibility for compliance falls on you. This guide is for educational purposes; consult with legal counsel to ensure your specific project is compliant.

Why can't I just use a VPN?

A VPN is the wrong tool for this job. It provides a single, static IP designed for user privacy, not automation. That one IP will be identified and blocked by Google almost immediately. A rotating proxy service gives you access to a pool of thousands or millions of IPs, allowing you to distribute requests and mimic organic traffic patterns at scale.

What’s the risk of using free proxies?

Free proxies are a liability. They are slow, unreliable, and almost certainly already blacklisted by Google. More critically, they pose a massive security threat. The operators of these free servers can monitor your traffic, inject malicious code, or steal your data. The minimal cost of a premium proxy service is negligible compared to the operational and security risks of free alternatives.

How much should I budget for proxies?

Cost depends entirely on scale and proxy type. Residential proxies are typically priced per gigabyte of bandwidth. A small-scale project might cost under $100/month, while an enterprise-level data extraction operation can scale into thousands. The correct way to evaluate cost is to measure it against the cost of failure: engineering hours spent debugging blocks, corrupted data, and project delays will always exceed the investment in proper infrastructure.
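Because residential bandwidth is billed per gigabyte, a back-of-the-envelope estimate is simple arithmetic. The sketch below uses made-up inputs: the average image size, the price per GB, and the 1.3 overhead factor (for page loads, retries, and failed requests) are assumptions to replace with your own measurements and your provider's actual pricing.

```python
def estimate_monthly_cost(images_per_month, avg_image_kb, price_per_gb,
                          overhead_factor=1.3):
    """Estimate monthly proxy spend in the same currency as price_per_gb.

    overhead_factor is an assumed multiplier for traffic that is not image
    payload (search pages, retries, blocked responses).
    """
    kb_per_gb = 1_000_000  # decimal units: 1 GB = 1,000,000 KB
    total_gb = images_per_month * avg_image_kb * overhead_factor / kb_per_gb
    return round(total_gb * price_per_gb, 2)


# Illustrative inputs only: 50,000 images at 200 KB each, $5 per GB.
small_project = estimate_monthly_cost(50_000, 200, 5.0)
```

With those illustrative inputs the traffic comes to roughly 13 GB, or about $65 a month, in line with the small-scale figure above. The overhead factor is the number most worth measuring, since a poorly tuned scraper can spend more bandwidth on retries than on images.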

Ready to build a scraping pipeline that actually delivers? HypeProxies provides the premium residential and ISP proxy infrastructure you need to get clean, consistent data from Google Images without the constant headaches.
