How to Scrape Google Search Results Without Getting Blocked

If you’re reading this, you’ve probably wasted days on guides that deliver a Python script that gets blocked on the tenth request. Let’s be clear: scraping Google search results is a serious data engineering challenge, not a weekend project for requests and BeautifulSoup. Most tutorials are fundamentally broken because they ignore the reality of anti-bot systems.

Gunnar

Last updated -

Feb 2, 2026

Why Hype Proxies

This guide is different. We operate a proxy network that serves billions of requests monthly, giving us a front-row seat to the common failure modes that burn through budgets and tank projects. We're skipping the fluff to give you the operational playbook for building a Google scraping pipeline that doesn't collapse at scale. You'll learn the why behind the strategies that actually hold up in production.

What is Google Search Scraping?

Google search scraping is the automated process of sending queries to Google and extracting data from the Search Engine Results Pages (SERPs). This isn't about simple HTML parsing; it's a discipline focused on emulating human behavior at scale to retrieve rankings, SERP features, pricing data, and competitive intelligence without triggering anti-bot defenses.

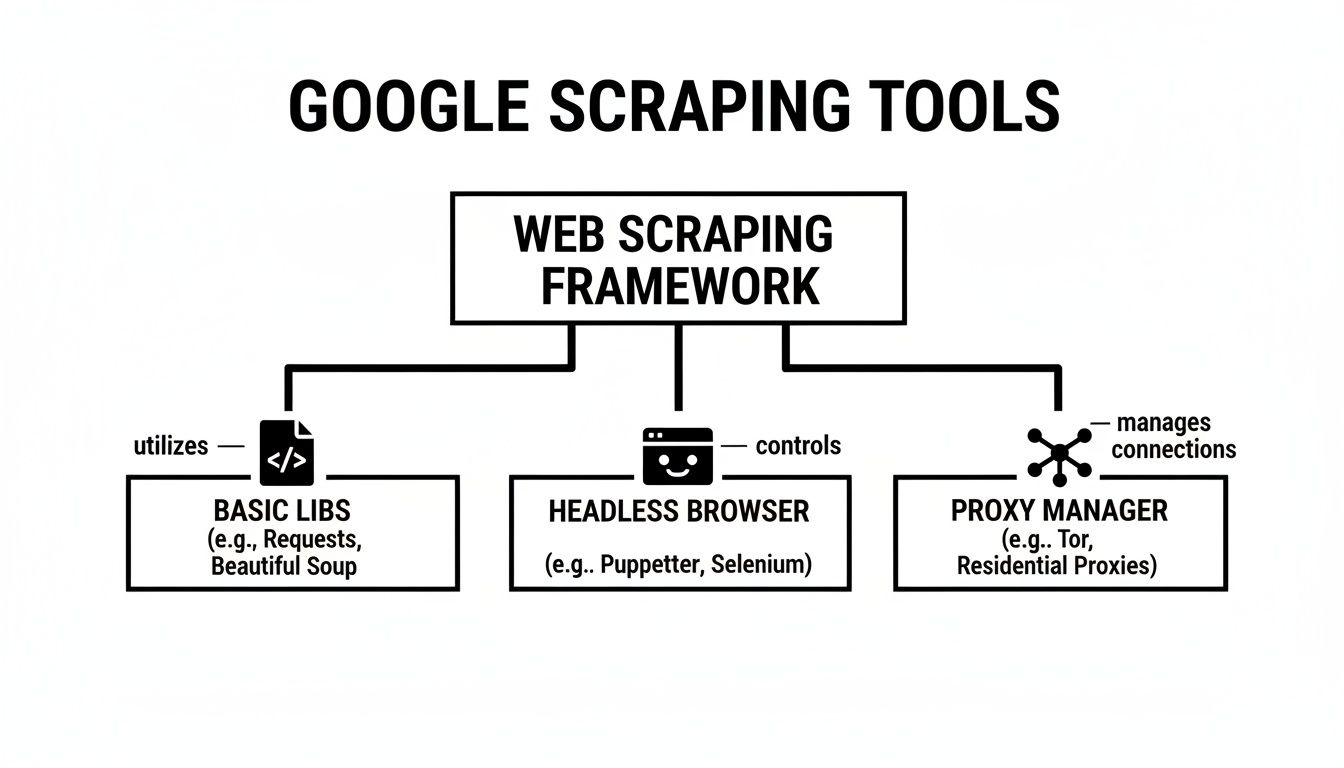

How Google Scraping Actually Works: An Operator's Perspective

At its core, scraping Google involves sending an HTTP request and parsing the HTML response. The simplicity ends there. Success hinges on mastering the mechanics of how your requests are perceived by Google's defense systems, which is where most operations fail.

This is a game of managing signatures and sessions. Get it wrong, and your entire IP pool gets burned.

Rotation Methods: The most common mistake is rotating IPs on every single request. This per-request rotation is an immediate red flag, as no real user teleports across the globe between clicks. The correct approach is session-based rotation, where a single IP (a "sticky" session) is maintained for a logical user journey, such as paginating through multiple results pages. Our engineer's guide to proxy IP rotators) details why this logic is critical.

Sticky Session Pitfalls: While necessary, sticky sessions have their own failure modes. Holding an IP for too long, especially if it's making many requests, increases its risk score. The optimal session duration is a balancing act—long enough to appear human, but short enough to avoid accumulating a bad reputation. A common mistake is using a single sticky IP for hundreds of queries, which guarantees it will be flagged.

IP Pool Health & Reuse: Not all IPs are created equal. The history of an IP address matters. When you use a proxy service, you're often sharing IPs with other users. If the pool is poorly managed, you could be assigned an IP that was just flagged for abuse by another user, inheriting their bad reputation. Quality providers quarantine IPs after they encounter blocks or CAPTCHAs, letting them cool down before reintroducing them to the pool.

Concurrency and Rate Limiting: Sending too many requests simultaneously (high concurrency) from a single IP is a rookie mistake. However, even with a large IP pool, an unnaturally consistent request rate (e.g., exactly one query every 2.0 seconds) is easily detectable. Real user traffic is bursty and unpredictable. Effective scraping requires randomized delays and jitter to mimic this organic pattern.

Proxy Types & Tradeoffs for Scraping Google

Your choice of proxy is the single most important decision you'll make. It dictates your success rate, budget, and the complexity of your scraping stack. Assume the reader already knows what a proxy server is and how it works; the real question is what actually works for a target like Google.

Proxy Type | When It Works | When It Fails (At Scale) | Cost vs. Success Tradeoff |

|---|---|---|---|

Datacenter | Low-stakes, low-volume scraping of less sophisticated websites. Basic IP masking for non-critical tasks. | Almost immediately against Google. Datacenter ASNs are well-known and heavily scrutinized, leading to instant blocks or CAPTCHAs. | Low Cost, Very Low Success. The savings are a false economy, as you'll waste engineering hours and get unreliable data. |

Residential | High-volume, high-success-rate scraping of sophisticated targets like Google. The IPs belong to real consumer ISPs, carrying a high trust score. | Can fail if the IP pool is low quality, overused, or if rotation logic is poor. A bad provider can sell you residential IPs that behave like datacenter IPs. | High Cost, High Success. The gold standard. The cost is justified by clean data, fewer blocks, and reduced maintenance overhead. |

ISP (Static Residential) | Scenarios requiring a stable, high-trust IP for an entire session, like managing an account or multi-step checkout processes. | Overkill for basic SERP scraping where rotation is key. Using a single static IP for thousands of queries will eventually get it flagged. | Very High Cost, Niche Success. Less of a scraping tool and more for specific automation tasks requiring session persistence from a trusted IP. |

Why You’re Still Getting Blocked (It's Not Just Your Proxy)

If you've invested in quality residential proxies and are still facing blocks, the problem isn't the IP address—it's the rest of your digital fingerprint. Google's anti-bot systems analyze hundreds of data points to build a trust score for every connection. Your goal is to make your scraper's fingerprint statistically indistinguishable from a real user's browser.

This is where 90% of scraping operations fail. They focus on the IP and ignore the more subtle, but equally critical, detection vectors.

Browser Fingerprinting: Headless browsers like Puppeteer and Playwright leak dozens of automation tells. Things like

navigator.webdriver, missing browser plugins, inconsistent font rendering (canvas fingerprinting), and specific WebGL parameters scream "bot." Hardening a headless browser is a constant cat-and-mouse game of patching these leaks.TLS/JA3 and Client Hints: Before your HTTP request is even sent, the TLS handshake creates a signature (a JA3 fingerprint). Standard Python libraries have a non-browser fingerprint that is an instant giveaway. Furthermore, modern browsers send Client Hints, which provide granular data about the device and browser version. If your User-Agent claims you're Chrome 108 on Windows but your TLS fingerprint and Client Hints match a Linux server running Python, you're caught.

Header Entropy: It's not just what headers you send, but in what order and combination. Real browsers send a specific, consistent set of headers. An incomplete or improperly ordered header block is a clear sign of an unsophisticated script.

ASN Reputation: The Autonomous System Number (ASN) reveals the network owner of your IP. Proxies from datacenters or cloud providers (AWS, Google Cloud) have ASNs with poor reputations for scraping. Your requests start with a low trust score by default, making you an easy target for blocks. Residential and ISP proxies use ASNs belonging to consumer internet providers, which are inherently more trusted.

Bad Rotation Logic: As mentioned, rotating your IP on every request is a massive red flag. Conversely, using a single sticky IP for too many rapid-fire requests will also get it burned. The logic must mimic human behavior: one IP for one logical task or session, then a new IP for the next. Check out our guide on integrating Playwright with proxies for practical implementation details.

Real-World Use Cases (And What Actually Works)

The right proxy strategy depends entirely on the specific data you're after. Generic advice is useless; here’s what we see work in production for common use cases.

1. SEO Rank Tracking at Scale

Why Proxies are Required: To get accurate, geo-specific rankings for thousands of keywords, you need to simulate searches from different locations without being personalized or blocked.

What Proxy Type Works: High-quality, geo-targeted residential proxies. The ability to specify a country or city is non-negotiable for accurate local SERP data.

What Fails at Scale: Datacenter proxies. They are quickly detected, leading to inaccurate or missing data. Using a single residential IP for too many keywords also leads to personalization and skewed results. Teams often underestimate the need for clean, targeted IPs for each distinct location they track.

What Teams Underestimate: The complexity of parsing. SERP layouts change constantly. A scraper that isn't built with resilient selectors (targeting data attributes instead of CSS classes) will break weekly. For example, tracking AI search tracker tools requires parsers that can handle dynamic, non-standard SERP features.

2. Ad Verification

Why Proxies are Required: To verify that ads are being displayed correctly in different regions and on different devices, without triggering fraud detection systems.

What Proxy Type Works: A mix of residential and mobile proxies. Mobile IPs are critical for verifying mobile-specific ad campaigns and carry an extremely high trust score.

What Fails at Scale: Any proxy type without precise geo-targeting. Using IPs from the wrong country or region will show the wrong ads, making the entire dataset useless. Google's ad systems have their own sophisticated bot detection, which will easily flag low-quality proxies.

What Teams Underestimate: Cookie and session management. Ad display is heavily influenced by user history. Each verification check must be done in a clean, isolated browser context with a fresh IP to avoid session contamination.

3. Market Research & Price Monitoring

Why Proxies are Required: To collect product and pricing data from Google Shopping and organic results without receiving biased, personalized prices or being blocked.

What Proxy Type Works: Large pools of rotating residential proxies. A large number of IPs is needed to distribute requests and avoid triggering rate limits on any single IP.

What Fails at Scale: Small proxy pools or slow rotation. Sending thousands of queries from a limited set of IPs will quickly lead to CAPTCHAs and blocks. You can find more on these Google search statistics here, which highlight the massive scale you're competing against.

What Teams Underestimate: The need for headless browsers. Google Shopping results are rendered dynamically. Using a simple HTTP client will miss most of the product data. This adds significant infrastructure cost and complexity.

How to Choose the Right Scraping Setup

Choosing the right setup is a tradeoff between cost, success rate, and engineering overhead. There is no single "best" solution.

Decision Rules:

If your target is Google, Datacenter proxies are not an option. Start with residential proxies. The debate is over.

If you need geo-specific data, your provider must offer country and city-level targeting. Verify this before you buy.

If you are scraping dynamic, JavaScript-heavy content (like AI Overviews or Shopping results), you must use a headless browser like Puppeteer or Playwright. A simple HTTP client will not work. We have a guide on integrating Puppeteer with proxies that covers the practicalities.

If your scraping tasks involve multi-step processes, you need sticky sessions. Ensure your proxy provider offers reliable session control.

Budget vs. Reliability

The single biggest buying mistake is trying to save money on proxies. A cheap proxy service that delivers a 50% success rate is more expensive than a premium service with a 99% success rate. The cost of failed requests, corrupted data, and wasted developer time debugging blocks will always exceed the initial savings. Budget for quality residential IPs from a reputable provider. You can learn more about how to use residential proxies in our detailed guide.

When NOT to Use Rotating Proxies

Rotating proxies are the wrong tool when you need a consistent identity over a long period. For tasks like social media account management or maintaining a persistent shopping cart, you should use a dedicated ISP or static residential proxy. Using a rotating proxy for these tasks will trigger security alerts and get the account locked.

Navigating the Legal and Ethical Guardrails

While technically possible, scraping Google operates in a legal and ethical gray area. Ignoring the rules is a significant business risk. The landmark hiQ Labs v. LinkedIn case established that scraping publicly available data does not violate the CFAA, but this is not a free pass.

Google's Terms of Service explicitly forbid automated access. While the ToS is not law, violating it gives them grounds to block you. The robots.txt file is another clear directive. Ignoring it is a sign of bad faith.

The operational rule is simple: be a good internet citizen.

Identify Yourself: Use a custom User-Agent that identifies your scraper and provides a contact method.

Go Slow: Implement randomized delays to avoid hammering their servers.

Don't Scrape PII: Never collect personally identifiable information.

Cache Responses: Don't scrape the same page twice if you don't have to.

Understanding SERP structure, such as identifying SERP Feature Opportunities, is part of responsible scraping, as it allows you to target only the data you need, minimizing your footprint.

FAQ: Straight Answers to Common Google Scraping Questions

We field questions about scraping Google constantly. Here are the no-fluff answers.

Is scraping Google legal?

It's complicated. Scraping public data is generally not illegal, thanks to cases like hiQ Labs v. LinkedIn. However, it is a direct violation of Google's Terms of Service, which gives them the right to block you. The key is to scrape responsibly, avoid PII, and don't overload their servers.

What's the real difference between a proxy and a VPN for scraping?

A VPN is for personal privacy; it routes all your traffic through a single, static IP. It is terrible for scraping and easily blocked. A proxy network is built for automation, giving you access to a massive pool of IPs that can be rotated to simulate thousands of different users, which is essential for avoiding detection.

Why shouldn't I use free proxies?

Free proxies are a false economy. They are slow, unreliable, and blacklisted everywhere. They are also a massive security risk, as the operators can monitor or manipulate your traffic. Using them for any serious project will result in failure and potential data breaches.

How much should I budget for proxies to scrape Google?

Forget cheap datacenter proxies. For reliable Google scraping, you need to budget for premium residential or ISP proxies. Costs are typically based on bandwidth usage. A serious operation should expect to budget anywhere from $300 to $1,000+ per month to get started. Anything less is likely to result in poor success rates and wasted engineering time.

When are rotating proxies the wrong tool?

Rotating proxies are wrong when you need a stable identity. For tasks like managing an online account, completing a multi-step form, or any action where changing your IP mid-session would trigger security flags, you need a static IP from an ISP or static residential proxy.

Ready to build a scraping infrastructure that actually works? The HypeProxies network is engineered for high-success-rate data collection against the toughest targets. Stop fighting with blockades and get the clean, consistent data you need. Explore our residential and ISP proxy solutions.

Share on

$1 one-time verification. Unlock your trial today.

Stay in the loop

Subscribe to our newsletter for the latest updates, product news, and more.

No spam. Unsubscribe at anytime.